Python Slots Memory

- An OS-specific virtual memory manager carves out a chunk of memory for the Python process. The darker gray boxes in the image below are now owned by the Python process. Python uses a portion of the memory for internal use and non-object memory. The other portion is dedicated to object storage (your int, dict, and the like).

- Our first test will look at how they allocate memory. Our second test will look at their runtimes. This benchmarking for memory and runtime is done on Python 3.8.5 using the modules tracemalloc for memory allocation tracing and timeit for the runtime evaluation. Results may vary on your personal computer.

If you have lots of 'small' objects in a Python program (objects which have few instance attributes), you may find that the object overhead starts to become considerable. The common wisdom says that to reduce this in CPython you need to re-define the classes to use __slots__, eliminating the attribute dictionary. But this comes with the downsides of limiting flexibility and eliminating the use of class defaults. Would it surprise you to learn that PyPy can significantly, and without any effort by the programmer, reduce that overhead automatically?

Slots have existed in Python since version 2.2. Their main purpose is memory optimisation: they let you get rid of the dict object that the interpreter creates for each instance of a class. If you create many small objects with a predefined structure and hit a memory limit, then slots can help you overcome that limitation.

Let's take a look.

Contents

- What Is Being Measured

- Object Internals

- Objects With Slots

Contrary to advice, instead of starting at the very beginning, we'll jump right to the end. The following graph shows the peak memory usage of the example program we'll be talking about in this post across seven different Python implementations: PyPy2 v6.0, PyPy3 v6.0, CPython 2.7.15, 3.4.9, 3.5.6, 3.6.6, and 3.7.0 [1].

For regular objects ('Point3D'), PyPy needs less than 700 MB to create 10,000,000, where CPython 2.7 needs almost 3.5 GB, and CPython 3.x needs between 1.5 and 2.1 GB [6]. Moving to __slots__ ('Point3DSlot') brings the CPython overhead closer to, but still higher than, that of PyPy. In particular, note that the PyPy memory usage is essentially the same whether or not slots are used.

The third group of data is the same as the second group, except instead of using small integers that should be in the CPython internal integer object cache [7], I used larger numbers that shouldn't be cached. This is just an interesting data point showing the allocation of three times as many objects, and won't be discussed further.

What Is Being Measured

In the script I used to produce these numbers [2], I'm using the excellent psutil library's Process.memory_info to record the 'unique set size' ('the memory which is unique to a process and which would be freed if the process was terminated right now') before and then after allocating a large number of objects.

This gives us a fairly accurate idea of how much memory the processes needed to allocate from the operating system to be able to create all the objects we asked for. (get_memory is a helper function that runs the garbage collector to be sure we have the most stable numbers.)
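The script itself is not reproduced here. As a rough stand-in, the before-and-after measurement can be sketched using only the standard library. Note the swap: this uses tracemalloc, which counts allocations made through Python's allocator, rather than psutil's unique set size, so its numbers are not comparable with the ones in this post. The names `get_traced_memory`, `measure_allocation` and the `Point3D` class are assumptions made up for the illustration.

```python
import gc
import tracemalloc


class Point3D:
    def __init__(self, x, y, z):
        self.x = x
        self.y = y
        self.z = z


def get_traced_memory():
    """Run the garbage collector first so the reading is as stable as possible."""
    gc.collect()
    current, _peak = tracemalloc.get_traced_memory()
    return current


def measure_allocation(factory, count):
    """Return (growth in traced bytes, number of objects created)."""
    tracemalloc.start()
    try:
        before = get_traced_memory()
        objects = [factory(i, i, i) for i in range(count)]
        # `objects` is still alive here, so its memory is included below.
        after = get_traced_memory()
        return after - before, len(objects)
    finally:
        tracemalloc.stop()


delta, created = measure_allocation(Point3D, 100_000)
print(f"{created} objects, about {delta / created:.0f} traced bytes each")
```

The per-object figure includes the list that holds the objects and any integers created along the way, so treat it as an upper bound on the size of one instance.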

What Is Not Being Measured

In this example output from a run of PyPy, the AbsoluteUsage is the total growth from when the program started, while the Delta is the growth just within this function.

This was the first of the test runs within this particular process. The second test run within this process reports higher absolute deltas since the beginning of the program, although the overall deltas are smaller. This indicates how much memory the program has allocated from the operating system but not returned to it, even though it may technically be free from the standpoint of the Python runtime; this accounts for things like internal caches, or in PyPy's case, jitted code.

Although I captured the data, this post is not about the startup or initial memory allocation of the various interpreters, nor about how much can easily be shared between forked processes, nor about how much memory is returned to the operating system while the process is still running. We're only talking about the memory size needed to allocate a given number of objects, i.e., the Delta column.

Object Internals

To understand what's happening, let's look at the two types of objects we're comparing:

These are both small classes with three instance attributes. One is a standard, default, object, and one specifies its instance attributes in __slots__.
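The class listing is missing from this copy of the post. A minimal sketch of what such a pair of classes might look like follows; the attribute names x, y and z come from later in the text, while the constructor signatures are an assumption.

```python
class Point3D(object):
    """Standard object: attributes are stored in a per-instance __dict__."""

    def __init__(self, x, y, z):
        self.x = x
        self.y = y
        self.z = z


class Point3DSlot(object):
    """Slotted object: attributes are declared up front, no __dict__."""

    __slots__ = ('x', 'y', 'z')

    def __init__(self, x, y, z):
        self.x = x
        self.y = y
        self.z = z


p = Point3D(1, 2, 3)
ps = Point3DSlot(1, 2, 3)
print(hasattr(p, '__dict__'))   # True
print(hasattr(ps, '__dict__'))  # False
```

The explicit `object` base is only needed on Python 2; on Python 3 it is harmless.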

Objects with Dictionaries

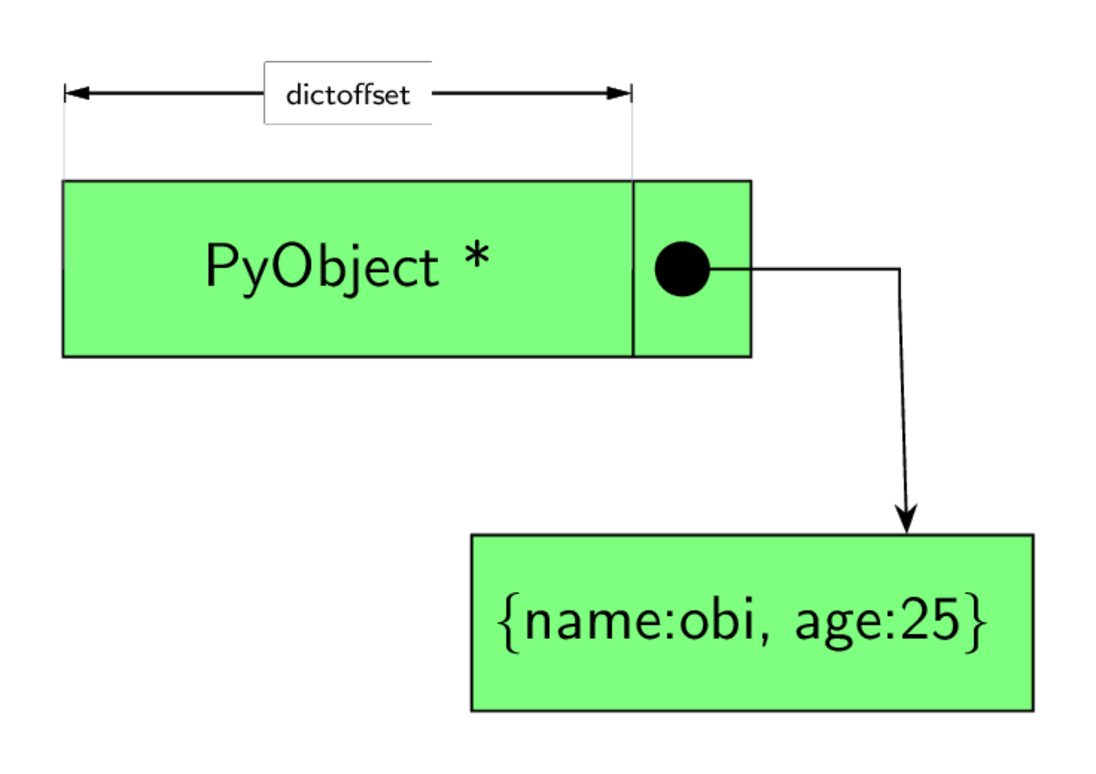

Standard objects, like Point3D, have a special attribute, __dict__, that is a normal Python dictionary object that is used to hold all the instance attributes for the object. We previously looked at how __getattribute__ can be used to customize all attribute reads for an object; likewise, __setattr__ can customize all attribute writes. The default __getattribute__ and __setattr__ that a class inherits from object function as if they were written to access the __dict__:
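The original listing is not available here, so the following is only a rough sketch of the idea, using a hypothetical class named `DictBacked`. It deliberately ignores details of the real lookup order (for example, data descriptors on the type are consulted before the instance dictionary).

```python
class DictBacked(object):
    """Illustrative only: attribute access spelled out in terms of __dict__."""

    def __getattribute__(self, name):
        # Fetch the instance dictionary without recursing into ourselves.
        instance_dict = object.__getattribute__(self, '__dict__')
        if name in instance_dict:
            return instance_dict[name]
        # Fall back to the normal machinery (class attributes, methods, ...).
        return object.__getattribute__(self, name)

    def __setattr__(self, name, value):
        object.__getattribute__(self, '__dict__')[name] = value


obj = DictBacked()
obj.x = 1
print(obj.x)         # 1
print(obj.__dict__)  # {'x': 1}
```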

One advantage of having a __dict__ underlying an object is the flexibility it provides: you don't have to pre-declare your attributes for every object, and any object can have any attribute, so it facilitates subclasses adding new attributes, or even other libraries adding new, specialized, attributes to implement caching of expensive computed properties.

One disadvantage is that a __dict__ is a generic Python dictionary, not specialized at all [3], and as such it has overhead.

On CPython, we can ask the interpreter how much memory any given object uses with sys.getsizeof. On my machine under a 64-bit CPython 2.7.15, a bare object takes 16 bytes, while a trivial subclass takes a full 64 bytes (due to the overhead of being tracked by the garbage collector):
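A sketch of how such a comparison could be run is shown below. The class name `TrivialSubclass` is made up for the example, and no expected sizes are given because they depend on the CPython version and platform.

```python
import gc
import sys


class TrivialSubclass(object):
    pass


bare = object()
trivial = TrivialSubclass()

# Shallow sizes only: getsizeof does not follow references such as __dict__.
print("bare object:     ", sys.getsizeof(bare))
print("trivial subclass:", sys.getsizeof(trivial))

# The subclass instance can hold references, so the collector tracks it.
print("tracked by GC:   ", gc.is_tracked(bare), gc.is_tracked(trivial))
```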

An empty dict occupies 280 bytes:

And so when you combine the size of the trivial subclass with the size of its __dict__, you arrive at a minimum object size of 344 bytes:

A fully occupied Point3D object is also 344 bytes:

Because of the way dictionaries are implemented [8], there's always a little spare room for extra attributes. We don't find a jump in size until we've added three more attributes:
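The arithmetic above can be reproduced with a short experiment along these lines. The helper `total_size` and the `extraN` attribute names are assumptions for the illustration; how many attributes fit before the dictionary changes size differs between CPython versions, so the script reports the count rather than assuming three.

```python
import sys


class Point3D(object):
    def __init__(self, x, y, z):
        self.x = x
        self.y = y
        self.z = z


def total_size(obj):
    """Shallow size of an instance plus its attribute dictionary."""
    return sys.getsizeof(obj) + sys.getsizeof(obj.__dict__)


p = Point3D(1, 2, 3)
print("instance + __dict__:", total_size(p))

# Keep adding attributes until the dictionary's reported size changes.
start = sys.getsizeof(p.__dict__)
added = 0
while sys.getsizeof(p.__dict__) == start and added < 100:
    setattr(p, "extra%d" % added, None)
    added += 1

print("dict size changed after adding", added, "attribute(s)")
```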

Note

These values can change quite a bit across Python versions, typically improving over time. In CPython 3.4 and 3.5, getsizeof({}) returns 288, while it returns 240 in both 3.6 and 3.7. In addition, getsizeof(pd.__dict__) returns 96 and 112 [4]. The answer to getsizeof(pd) is 56 in all four versions.

Objects With Slots

Objects with a __slots__ declaration, like Point3DSlot, do not have a __dict__ by default. The documentation notes that this can be a space savings. Indeed, on CPython 2.7, a Point3DSlot has a size of only 72 bytes, only one full pointer larger than a trivial subclass (when we do not factor in the __dict__):
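A hedged way to check this on your own interpreter is sketched below, with the two classes redefined so the snippet runs on its own (their exact definitions are an assumption). The absolute numbers will differ from the 2.7 figures quoted here.

```python
import sys


class Point3D(object):
    def __init__(self, x, y, z):
        self.x = x
        self.y = y
        self.z = z


class Point3DSlot(object):
    __slots__ = ('x', 'y', 'z')

    def __init__(self, x, y, z):
        self.x = x
        self.y = y
        self.z = z


p = Point3D(1, 2, 3)
ps = Point3DSlot(1, 2, 3)

# The regular object pays for the instance and for its dictionary.
with_dict = sys.getsizeof(p) + sys.getsizeof(p.__dict__)
slotted = sys.getsizeof(ps)

print("Point3D + __dict__:", with_dict)
print("Point3DSlot:       ", slotted)
```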

If they don't have an instance dictionary, where do they store their attributes? And why, if Point3DSlot has three defined attributes, is it only one pointer larger than Point3D?

Slots, like @property, @classmethod and @staticmethod, are implemented using descriptors. For our purpose, descriptors are a way to extend the workings of __getattribute__ and friends. A descriptor is an object whose type implements a __get__ method, and when that object is found in a type's dictionary, it is called instead of checking the __dict__. Something like this [5]:
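The original listing is not reproduced here. As a small stand-in, this sketch defines a hypothetical descriptor, `AlwaysFortyTwo`, and shows that it wins over a value placed directly in the instance dictionary. It also defines __set__, which makes it a data descriptor; that is what gives it priority over the instance's __dict__.

```python
class AlwaysFortyTwo(object):
    """A data descriptor: defines both __get__ and __set__."""

    def __get__(self, instance, owner=None):
        if instance is None:
            # Accessed on the class itself: return the descriptor object.
            return self
        return 42

    def __set__(self, instance, value):
        raise AttributeError("read-only attribute")


class Answer(object):
    value = AlwaysFortyTwo()


a = Answer()
# Sneak a competing entry into the instance dictionary.
a.__dict__['value'] = 0

print(a.value)              # 42, the descriptor is consulted first
print(a.__dict__['value'])  # 0, the dictionary entry is still there
```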

When the class statement (indeed, when the type metaclass) finds __slots__ in the class body (the class dictionary), it takes special steps. Most importantly, it creates a descriptor for each mentioned slot and places it in the class's __dict__. So our Point3DSlot class gets three such descriptors:
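One way to see those descriptors, and the reason a slot name cannot also carry a class-level default, is sketched below. The class `WithDefault` is a made-up example, and the printed type names are whatever your interpreter reports.

```python
class Point3DSlot(object):
    __slots__ = ('x', 'y', 'z')


# Each slot name maps to a descriptor stored in the class dictionary.
for name in Point3DSlot.__slots__:
    descriptor = vars(Point3DSlot)[name]
    print(name, type(descriptor).__name__)

# The descriptor can be driven by hand, just like normal attribute access.
ps = Point3DSlot()
vars(Point3DSlot)['x'].__set__(ps, 10)
print(vars(Point3DSlot)['x'].__get__(ps, Point3DSlot))  # 10

# A class attribute with the same name would overwrite the descriptor,
# so CPython refuses to create the class at all.
try:
    class WithDefault(object):
        __slots__ = ('x',)
        x = 0
except ValueError as exc:
    conflict = str(exc)
else:
    conflict = None
print(conflict)
```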

Variable Storage

We've established how we can access these magic, hidden slotted attributes (through the descriptor protocol). (We've also established why we can't have defaults for slotted attributes in the class.) But we still haven't found out where they are stored. If they're not in a dictionary, where are they?

The answer is that they're stored directly in the object itself. Every type has a member called tp_basicsize, exposed to Python as __basicsize__. When the interpreter allocates an object, it allocates __basicsize__ bytes for it (every object has a minimum basic size, the size of object). The type metaclass arranges for __basicsize__ to be big enough to hold (a pointer to) each of the slotted attributes, which are kept in memory immediately after the data for the basic object. The descriptor for each attribute, then, just does some pointer arithmetic off of self to read and write the value. In a way, it's very similar to how collections.namedtuple works, except using pointers instead of indices.

That may be hard to follow, so here's an example.

The basic size of object exactly matches the reported size of itsinstances:

We get the same when we create an object that cannot have any instancevariables, and hence does not need to be tracked by the garbagecollector:

When we add one slot to an object, its basic size increases by one pointer (8 bytes), and because the object can now hold a reference to another object, it needs to be tracked by the garbage collector, so getsizeof reports some extra overhead:
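The listings for these three cases are missing from this copy. A sketch of the same experiment follows, with made-up class names `EmptySlots` and `OneSlot`. It is CPython-specific, and the getsizeof overhead for tracked objects varies by version, so only the basic sizes are compared.

```python
import struct
import sys

# Size of a C pointer on this build (8 on a 64-bit interpreter).
POINTER_SIZE = struct.calcsize('P')


class EmptySlots(object):
    # No instance variables at all, and no __dict__ either.
    __slots__ = ()


class OneSlot(object):
    __slots__ = ('a',)


for cls in (object, EmptySlots, OneSlot):
    print(cls.__name__, cls.__basicsize__, sys.getsizeof(cls()))

# Each slot adds room for exactly one pointer to the basic size.
print(OneSlot.__basicsize__ - object.__basicsize__ == POINTER_SIZE)
```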


The basic size for an object with 3 slots is 16 (the size of object) + 3 pointers, or 40. What's the basic size for an object that has a __dict__?

Hmm, it's 16 + 2 pointers. What could those two pointers be? Documentation to the rescue:

__slots__ allow us to explicitly declare data members (like properties) and deny the creation of __dict__ and __weakref__ (unless explicitly declared in __slots__...)

So those two pointers are for __dict__ and __weakref__, things that standard objects get automatically, but which we have to opt in to if we want them with __slots__. Thus, an object with three slots is one pointer size bigger than a standard object.
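The opt-in behaviour can be seen with two hypothetical classes, `Strict` and `Flexible`. This sketch only demonstrates the behaviour, not the sizes, since the way CPython lays out __dict__ and __weakref__ has changed between versions.

```python
import weakref


class Strict(object):
    # Only the declared slot: no __dict__, no __weakref__.
    __slots__ = ('x',)


class Flexible(object):
    # Explicitly opt back in to both.
    __slots__ = ('x', '__dict__', '__weakref__')


strict = Strict()
try:
    strict.extra = 1
    strict_allows_extra = True
except AttributeError:
    strict_allows_extra = False

try:
    weakref.ref(strict)
    strict_weakrefable = True
except TypeError:
    strict_weakrefable = False

flexible = Flexible()
flexible.extra = 1            # lands in the opted-in __dict__
ref = weakref.ref(flexible)   # works thanks to __weakref__

print(strict_allows_extra, strict_weakrefable)  # False False
print(flexible.__dict__, ref() is flexible)     # {'extra': 1} True
```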

How PyPy Does Better

By now we should understand why the memory usage dropped significantly when we added __slots__ to our objects on CPython (although that comes with a cost). That leaves the question: how does PyPy get such good memory performance with a __dict__ that __slots__ doesn't even matter?

Earlier I wrote that the __dict__ of an instance is just a standard dictionary, not specialized at all. That's basically true on CPython, but it's not at all true on PyPy. PyPy basically fakes __dict__ by using __slots__ for all objects.

A given set of attributes (such as our 'x', 'y', 'z' attributes for Point3DSlot) is called a 'map'. Each instance refers to its map, which tells PyPy how to efficiently access a given attribute. When an attribute is added or deleted, a new map is created (or re-used from an existing object; objects of completely unrelated types, but having common attributes can share the same maps) and assigned to the object, re-arranging things as needed. It's as if __slots__ was assigned to each instance, with descriptors added and removed for the instance on the fly.
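To make the idea concrete, here is a toy model of maps written in plain Python. It is not PyPy's implementation, and every name in it (`Map`, `MappedObject`, `EMPTY_MAP`) is invented for the illustration; it supports only adding and reading attributes, and only shows how instances with the same attributes can share one layout description while storing just their values.

```python
class Map(object):
    """Describes a layout: which attribute lives at which storage index."""

    def __init__(self, names=()):
        self.names = tuple(names)
        self.index = {name: i for i, name in enumerate(self.names)}
        self._transitions = {}

    def with_attribute(self, name):
        """Return the (shared) map reached by adding one more attribute."""
        nxt = self._transitions.get(name)
        if nxt is None:
            nxt = Map(self.names + (name,))
            self._transitions[name] = nxt
        return nxt


EMPTY_MAP = Map()


class MappedObject(object):
    """An instance holds only a map reference and a compact value list."""

    def __init__(self):
        self.map = EMPTY_MAP
        self.storage = []

    def set(self, name, value):
        i = self.map.index.get(name)
        if i is None:
            # New attribute: move to the next map and grow the storage.
            self.map = self.map.with_attribute(name)
            self.storage.append(value)
        else:
            self.storage[i] = value

    def get(self, name):
        try:
            return self.storage[self.map.index[name]]
        except KeyError:
            raise AttributeError(name) from None


a, b = MappedObject(), MappedObject()
for obj, values in ((a, (1, 2, 3)), (b, (4, 5, 6))):
    for name, value in zip('xyz', values):
        obj.set(name, value)

print(a.map is b.map)          # True: one map shared by both instances
print(a.get('y'), b.get('y'))  # 2 5
```

Because the attribute names live in the shared map, each instance carries only its values, which is the effect __slots__ achieves by hand on CPython.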

If the program ever directly accesses an instance's __dict__, PyPy creates a thin wrapper object that operates on the object's map.

So for a program that has many similar looking objects, even if unrelated, PyPy's approach can save a lot of memory. On the other hand, if the program creates objects that have a very diverse set of attributes, and that program frequently directly accesses __dict__, it's theoretically possible that PyPy could use more memory than CPython.

You can read more about this approach in this PyPy blog post.

Footnotes

| [1] | All 64-bit builds, all tested on macOS. The results on Linux were very similar. |


| [2] | Available at this gist. |

| [3] | In CPython. But I'm getting ahead of myself. |

| [4] | The CPython dict implementation was completely overhauled in CPython 3.6. And based on the sizes of {} versus pd.__dict__ we can see some sort of specialization for instance dictionaries, at least in terms of their fill factor. |

| [5] | This is very rough, and actually inaccurate in some small but important details. Refer to the documentation for the full protocol. |

| [6] | No, I'm not totally sure why Python 3.7 is such an outlier and uses more memory than the other Python 3.x versions. |

| [7] | See PyLong_FromLong |

| [8] | With a particular desired load factor. |